Extracting the salt from Miyoushe (米游社) version 2.62.2

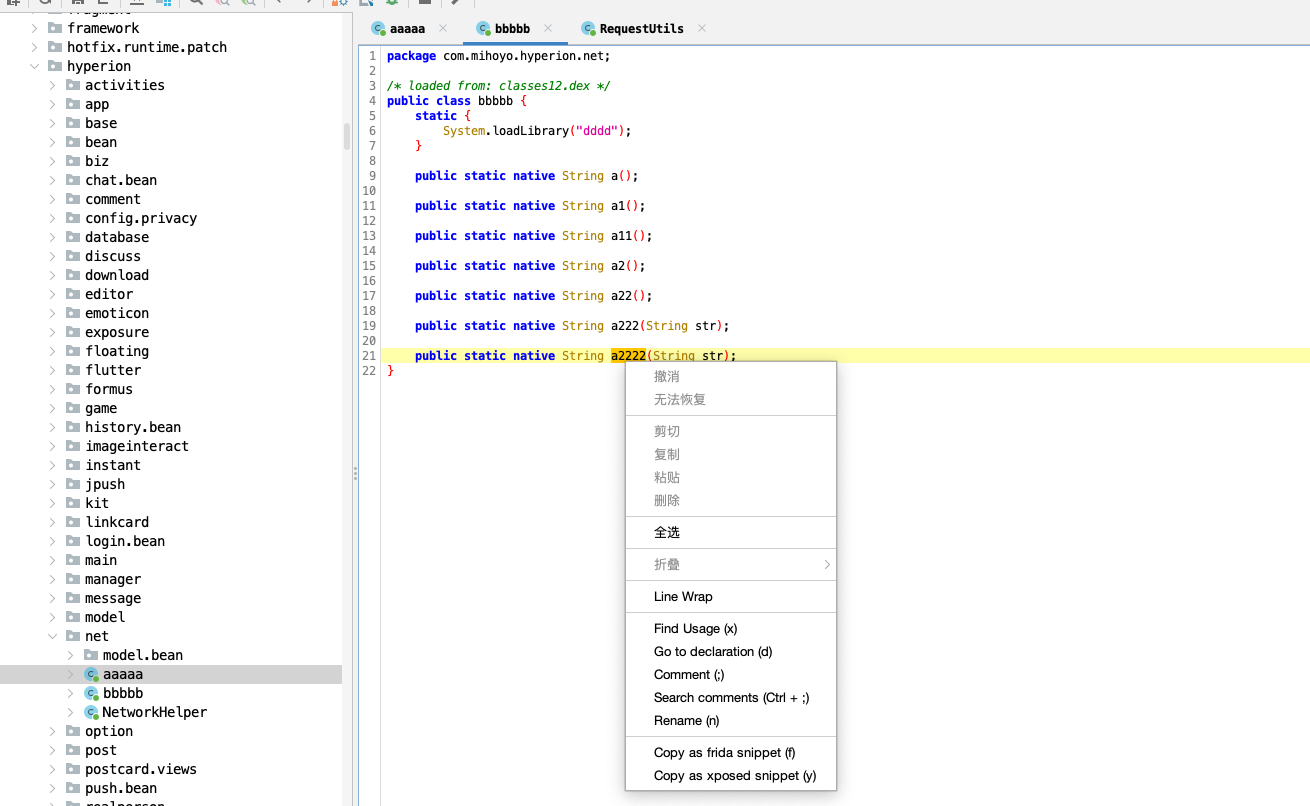

First, download the APK from the official Miyoushe website, then decompile it with jadx.

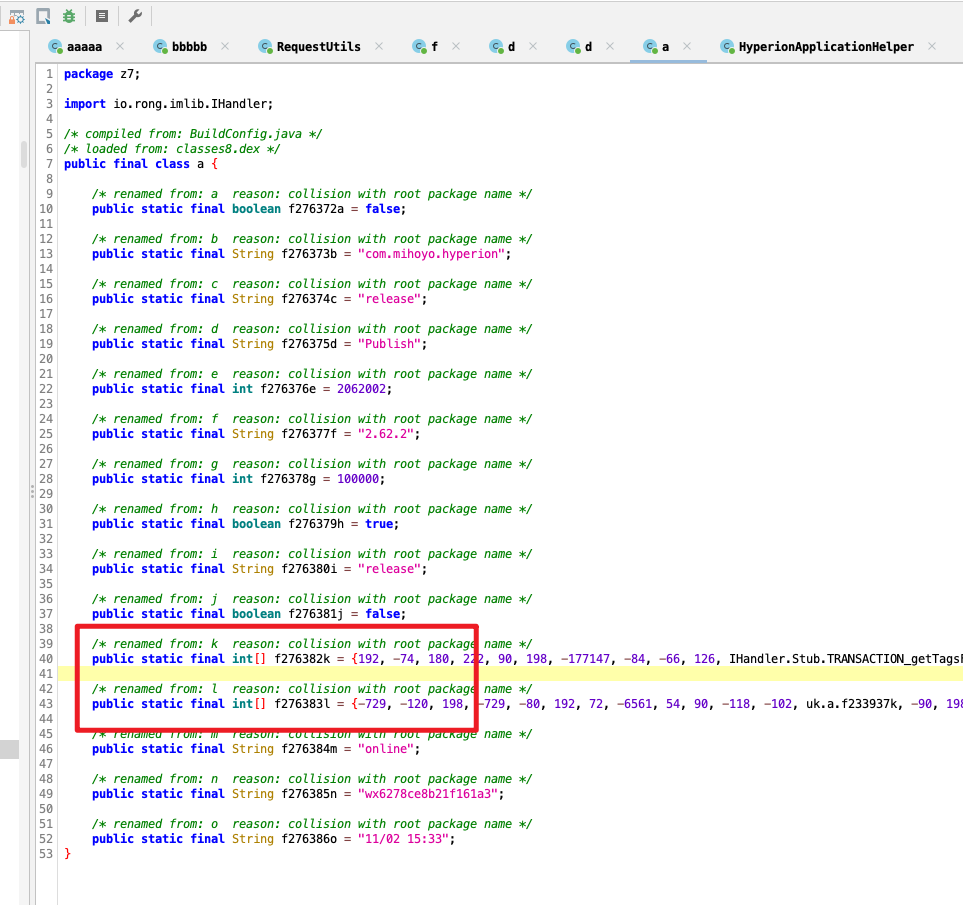

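According to an earlier write-up, the DS generation algorithm lives in the com.mihoyo.hyperion.net package. Open that package in jadx and you will find bbbbb.a2222, aaaaa.a2222 and aaaaa.b5555, which implement the DS1 and DS2 algorithms.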

Right-click a method name and choose Find Usage to jump to the code that uses it.

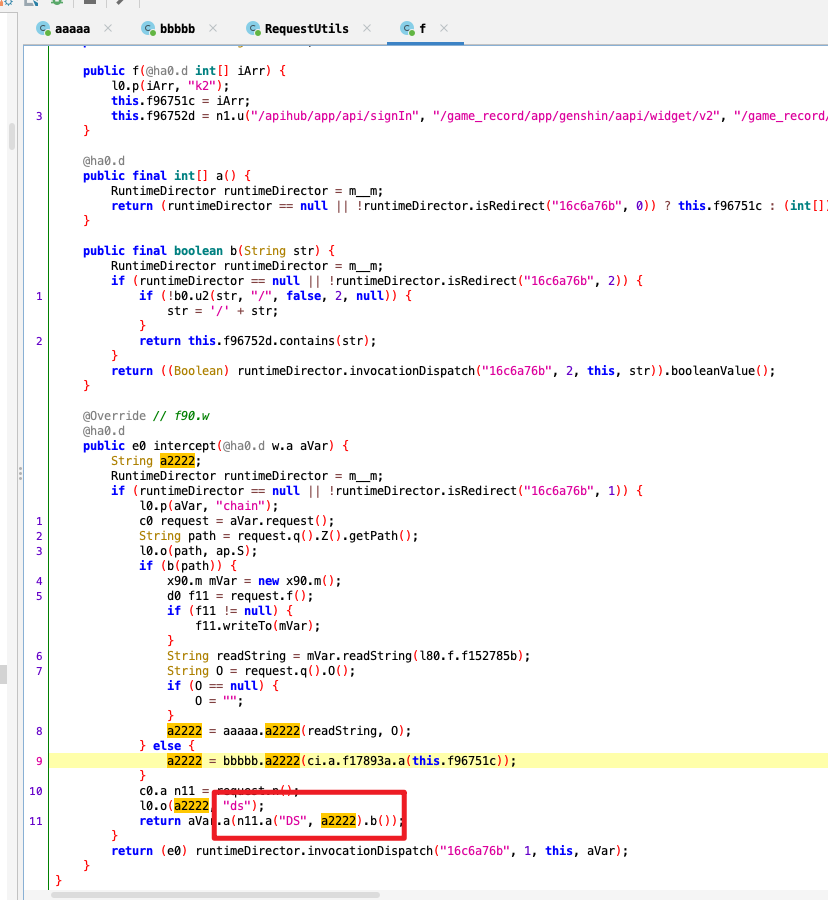

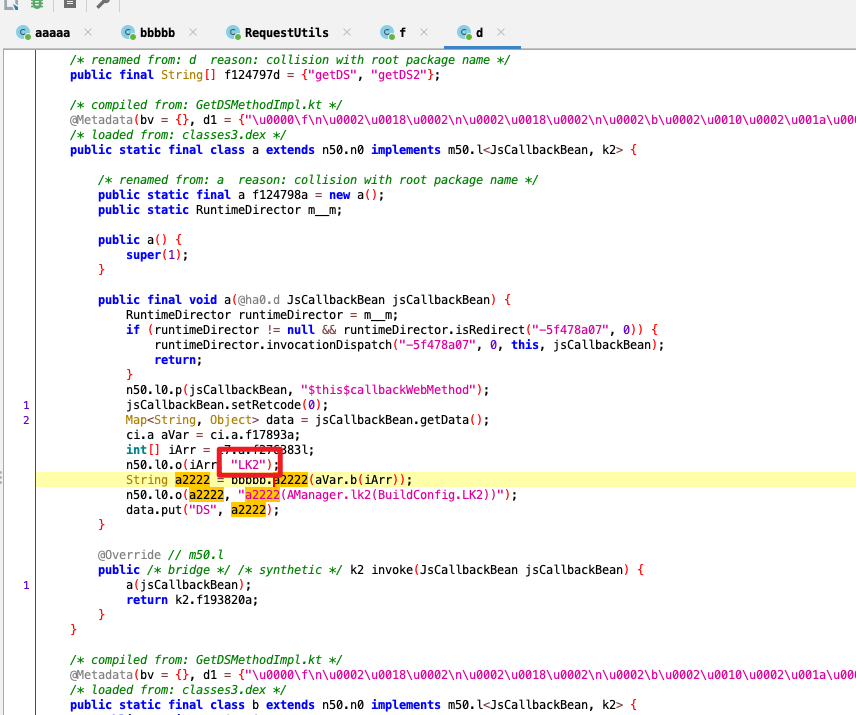

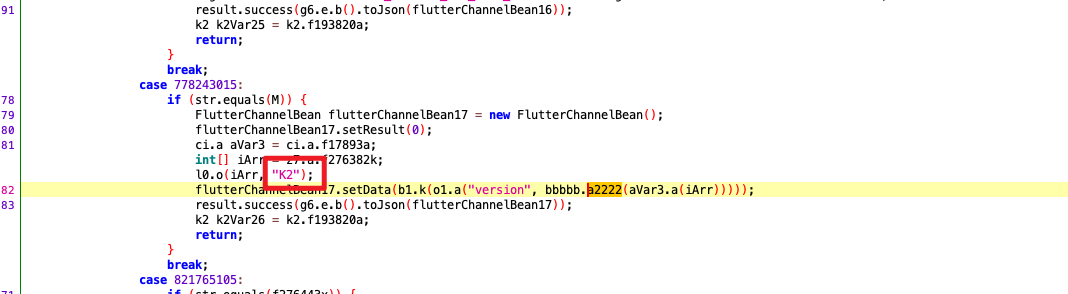

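Here you can see the encryption routines for DS, LK2 and K2. Each one feeds a value through helper functions into the bbbbb.a2222 method; the argument that ends up being passed there is the salt.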

Taking DS as the example, copy out the methods and arguments that feed into bbbbb.a2222, adjust them slightly, and you get the following code:

```java
import java.util.ArrayList;
```

Running it prints pIlzNr5SAZhdnFW8ZxauW8UlxRdZc45r, which is the salt. Plugging it into the relevant API confirmed that it works.
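For the verification step, a minimal sketch of how a recovered salt might be turned into a DS header value is given below. It assumes the community-documented DS1 layout, `t,r,md5("salt=<salt>&t=<t>&r=<r>")`, with `t` a Unix timestamp in seconds and `r` a six-character random string. That layout is not taken from this post, so check it against the decompiled code before relying on it. The class and method names (`DsSketch`, `buildDs`, `md5Hex`, `randomString`) are made up for illustration.

```java
import java.nio.charset.StandardCharsets;
import java.security.MessageDigest;
import java.security.NoSuchAlgorithmException;
import java.util.Random;

class DsSketch {
    // Lowercase hex MD5 of a UTF-8 string.
    static String md5Hex(String input) {
        try {
            MessageDigest md = MessageDigest.getInstance("MD5");
            byte[] digest = md.digest(input.getBytes(StandardCharsets.UTF_8));
            StringBuilder sb = new StringBuilder();
            for (byte b : digest) {
                sb.append(String.format("%02x", b));
            }
            return sb.toString();
        } catch (NoSuchAlgorithmException e) {
            throw new IllegalStateException("MD5 not available", e);
        }
    }

    // ASSUMPTION: DS1 layout is "t,r,md5(salt=<salt>&t=<t>&r=<r>)".
    static String buildDs(String salt, long t, String r) {
        String sign = md5Hex("salt=" + salt + "&t=" + t + "&r=" + r);
        return t + "," + r + "," + sign;
    }

    // Random lowercase alphanumeric string used as the "r" field.
    static String randomString(int length) {
        String alphabet = "abcdefghijklmnopqrstuvwxyz0123456789";
        Random random = new Random();
        StringBuilder sb = new StringBuilder();
        for (int i = 0; i < length; i++) {
            sb.append(alphabet.charAt(random.nextInt(alphabet.length())));
        }
        return sb.toString();
    }

    public static void main(String[] args) {
        // Salt recovered in this post.
        String salt = "pIlzNr5SAZhdnFW8ZxauW8UlxRdZc45r";
        long t = System.currentTimeMillis() / 1000; // seconds since epoch
        System.out.println(buildDs(salt, t, randomString(6)));
    }
}
```

The printed value would go into the request's DS header. If the server rejects it, the assumed layout or the salt type (DS, LK2 or K2) is the first thing to re-check.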

References

- https://github.com/skylot/jadx

- https://github.com/UIGF-org/mihoyo-api-collect/issues/1

- https://github.com/Azure99/GenshinPlayerQuery/issues/20